-

• #61253

Just realised what I did: meant to write big, wrote bit - sorry.

@Mickie_Cricket and @JurekB you were right to call me out on that. Phone typo. Edited.

-

• #61254

🎶 If you're racist and you're fired it's your fault 🎶

https://www.mirror.co.uk/sport/football/news/breaking-burnley-fan-behind-white-22246694

-

• #61255

meant to write big, wrote bit

Sorry, actual lol there

-

• #61256

I wasn't aware that there was a sliding scale to racism.

I don't get this - I want to say you can be a bit or a big (like, everyone's at least a bit), and similarly you can act in bit or big racist ways. But I feel like your question was rhetorical...

I don't know that it's helpful to think about being 'squeaky clean' - we're all in this society and this system, and every day you make choices. (I feel like I'm missing your point.)

-

• #61257

Ha! I just thought you were using comedy understatement!

-

• #61258

I assumed everyone understood it as a comedic reference to the Avenue Q song:

-

• #61259

Squeaky clean is the wrong expression to have used.

I'm not going to say 'woke' cos I'm too old.

'Having a degree of sensitivity and understanding' is my best shot - but it's too much of a mouthful.

And the question was rhetorical.

Peace and love.

-

• #61260

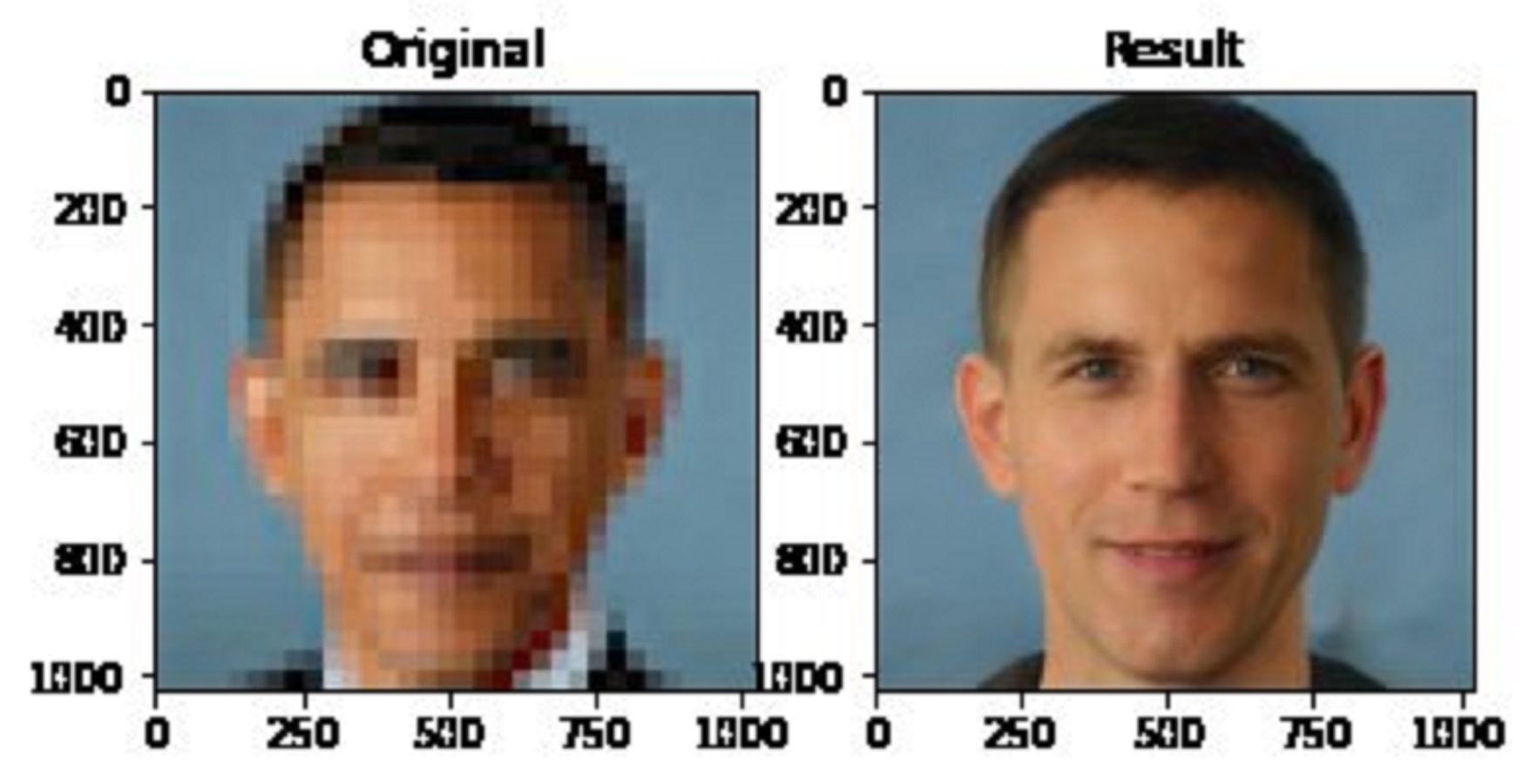

AI, everybody. It's totally fine and unbiased in any way, shape or form.

Denis Malimonov is the programmer behind Face Depixelizer, and in an email to Motherboard said the tool is not meant to actually recover a low-resolution image, but rather create a new and imagined one through artificial intelligence.

“There is a lot of information about a real photo in one pixel of a low-quality image, but it cannot be restored,” Malimonov said. “This neural network is only trying to guess how a person should look.”

-

• #61261

Surely if it's a neural network, it's heavily dependent on what data it's been trained on, right? So there's always going to be an inherent bias as a result of what data has been chosen to train it on.

Haven't yet read the article by the way so apologies if I'm way off the mark as a result.

Edit: Should've read the article, last paragraph here:

“Dataset is generally biased which leads to bias in the models trained. The methodology can also lead to bias,” Jolicoeur-Martineau said in an email. “However, bias inherently comes from the researcher themselves which is why we need more diversity. If an all-white set of male researchers work on project, it's likely that they will not think about the bias of their dataset or methodology.”

-

• #61263

.

1 Attachment

-

• #61264

Is it an antisemitic conspiracy theory?

This is what the article said:

"Born in Bolton to a lorry driver father and care worker mother, Peake is strident and expressive; if religion wasn’t anathema to her, she’d be perfect in the pulpit. “Systemic racism is a global issue,” she adds. “The tactics used by the police in America, kneeling on George Floyd’s neck, that was learnt from seminars with Israeli secret services.” (A spokesperson for the Israeli police has denied this, stating that “there is no tactic or protocol that calls to put pressure on the neck or airway”.)"

Someone who is talking in an article about Labour politics should probably stay well away from tangential links to Israel. And it does sound a bit like they're trying to blame Israel for making American police violent or racist.

But it's not disputed that many US police forces train in Israel. The Anti-Defamation League have paid for it for two decades: https://www.haaretz.com/us-news/.premium-u-s-police-departments-cancel-participation-in-israel-program-due-to-bds-pressure-1.6701898

So it sounds like Maxine Peake has an anti-Israel bias that has caused her to say something contentious that you couldn't really prove without knowing what the extent of the Israeli-US police cooperation is. Is that enough to warrant the description of "antisemitic conspiracy theory?"

-

• #61265

Is it an antisemitic conspiracy theory?

It doesn't matter, someone in Labour linked to something that mentioned Israel so the media will say it and they are antisemitic.

-

• #61266

I'm no RLB fan, but this seems harsh.

-

• #61267

Apparently the Indie posted a correction to that piece since RLB retweeted it. The correction was to the bit that got her in trouble. No idea what it said originally though.

-

• #61268

Keir Starmer is in a bind either way, though. Sacking her means potentially losing more support on the Labour left, and appearing to confirm the idea that Labour is filled with dangerous anti-semites. Not sacking her could have led to fresh allegations that concerns about anti-semitism were not being dealt with.

If only there was a clear, non-contentious definition of anti-semitism that everyone could agree on.

-

• #61269

I think the correction is the bit in brackets in the quotation that I posted.

-

• #61270

So ready to sack a woman. A male politician would have been given time to 'reflect' and apologise.

-

• #61271

I don't think so. Whoever did it would have been booted out; whether it's really fair or not, no one is going to be allowed to let all that shit be dragged up again. It also, perhaps coincidentally, provides a handy contrast between Johnson's attitude to Cummings and Jenrick and Starmer's approach.

-

• #61272

There is obviously a problem there, but there are actually loads of different problems layered into it. Fundamentally, there is a many-to-one issue that results from the loss of information when you pixelate. I'm no expert on AI, but if you train on a dataset that's representative of the population, it may always avoid producing faces from a minority group.

Facial characteristics can (under some models) be represented as a deviation from a gender/ethnic norm. So if you try to infer ethnicity and gender first you may get a higher likelihood of a correct outcome, but then there's the issue that you have an unknown lighting source, which means that skin tone is really hard to read.

Essentially this is a great example of something that humans are good at and computers aren't (yet). I suspect, however, that the example of Barack Obama is fooling us as to the size of that disparity because it triggers a whole load of contextual information for our recognition that computers have no access to.
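A toy sketch of the many-to-one point, in Python. The assumptions are mine, not from the article or from Face Depixelizer: pixelation is plain 2x2 block averaging, the "faces" are made-up 4x4 greyscale grids, and the hypothetical `guess()` just returns whichever matching training image is most common. That is a crude stand-in for a model's learned prior, not how the real tool works.

```python
from collections import Counter


def pixelate(img, block=2):
    """Average each block x block tile of a 2D list of greyscale values."""
    h, w = len(img), len(img[0])
    return tuple(
        tuple(
            sum(img[y + dy][x + dx] for dy in range(block) for dx in range(block))
            / (block * block)
            for x in range(0, w, block)
        )
        for y in range(0, h, block)
    )


def guess(low_res, training_set):
    """Hypothetical 'reconstruction': the most frequent training image that
    pixelates to low_res, or None if nothing in the training set matches."""
    matches = Counter()
    for img in training_set:
        if pixelate(img) == low_res:
            matches[tuple(map(tuple, img))] += 1
    if not matches:
        return None
    best, _ = matches.most_common(1)[0]
    return [list(row) for row in best]


# Two different made-up 4x4 "faces" that share exactly the same block averages.
face_a = [[10, 30, 50, 50],
          [30, 10, 50, 50],
          [90, 90, 0, 40],
          [90, 90, 40, 0]]
face_b = [[20, 20, 40, 60],
          [20, 20, 60, 40],
          [80, 100, 20, 20],
          [100, 80, 20, 20]]

# Hypothetical training set in which face_a is nine times more common.
training = [face_a] * 9 + [face_b]
low = pixelate(face_b)
print(guess(low, training) == face_a)  # True: the input came from face_b
```

Both grids collapse to the same pixelated grid, so nothing in the low-res input can tell them apart. The guess falls back on how often each face appears in the training data, and with a 9:1 split it returns face_a every time, even when the input actually came from face_b.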

-

• #61273

Such a contrast to this situation:

https://www.theguardian.com/politics/2020/jun/25/no-10-backs-housing-secretary-despite-fresh-revelations-over-development

Edit: Ah, I see Will just beat me to it.

-

• #61274

https://thegradient.pub/pulse-lessons/

Some more discussion on how the community responded to this.

-

• #61275

I don't know if this is true.

Firstly, I wouldn't be surprised if there's an incredibly explicit message in the shadow cabinet that there's to be no mention of Israel whatsoever. For RLB specifically, it's her job to hold the government to account on its education record (something she should be making hay with right now). I'm not sure why it was necessary to reference Israeli police training. Even if it's not an anti-Semitic reference, it's still a risk to post it, and it doesn't reflect well on her judgement that she did so.

Secondly, I've not seen much evidence from Starmer thus far that he'd have treated a male colleague any differently.

-

@Platini

Knowing @fox as I do, I suspect he was just using understatement as a form of humour, given that it's clear that discriminating against "black mamas or pakistanis" is obviously very racist indeed.