-

• #377

what happens when societies worship STEM to the extent that there's hardly anyone in power with the skills and knowledge provided by social sciences and humanities education any more. No one is able to call bullshit when presented with "science".

I'd say if anything the opposite is true (in the UK at least, and it doesn't seem much different in the US). Lots of people in charge with no STEM background; our whole cabinet has one person with one.

-

• #378

I read it as being in reference to the SLT of private tech companies. I'd also agree with your point as the opposite side of the same coin and say there's a multiplier effect of the two mismatches.

But then I've just been listening to the Centrist Dads interview Nick Clegg.

-

• #379

So how are you guys preparing for a post-labour economy? I haven't been able to stop thinking about what the world will look like when most jobs are gone. I've (hopefully) got ten years of work left, so I may miss the big shift, but I've seen some postulate that it may come even sooner than that. Thoughts?

-

• #380

I'm not, because, IMO, it's not coming any time soon. LLMs have already hit the wall between just looking as if they'll change our world and actually being able to change it non-trivially. Again, there's a chasm between very sophisticated auto-completes and actual human-like intelligence, and no evidence that that chasm will be crossed soon using current approaches. Everyone who says otherwise has a vested interest in the AI grift.

-

• #381

So you're saying don't believe the hype? Is that all it is? I know there is a lot of hype, gotta grab those investor dollars while you can. There's talk of AGI being very close, maybe it being here already. I have no idea, I'm not an AI guy but I am interested in how it will impact society.

-

• #382

D'ya reckon it'll be more Logan's Run than Hunger Games?

Either way I'm thinking I'm the wrong side of the class divide to enjoy the outcome much.

-

• #383

I think the widespread disruption to many occupations is a) over-hyped and b) some way off still. Current AI models are unable to reason, and until they can (and no-one really knows when that will happen) they will always need human support.

Take self-driving cars, for example, which we've been assured are two years away for most of the last ten years. Full automation is still years away, and what we get instead is features, like lane assist and self-parking, that can do some of the more mundane tasks but still require human intervention when necessary.

-

• #384

Get a real job, and get paid very little for it because everyone will need to do it.

-

• #385

Quite. Both the hype around 'self-driving' cars a few years ago and that around 'AI' were designed to introduce the world to, and soften it up for, a type of product, not a specific achievement or anything market-ready, way before the technology is mature (if it ever will be). The primary official selling points revolve around human well-being, e.g. 'AI will cure cancer' or 'self-driving cars will eliminate crashes'. As people often trust such information, many now believe that these innovations are much more advanced than they really are, and indeed that they are desirable.

All indications are that they're just bad ideas, expressions of a particularly nonsensical branch of futurism whose only end result could be vastly-increased injustice. We need less automobilism, not more, and we need fewer resources spent on running wasteful, badly-programmed oceans of code, not more.

The problem is that many people think these are all steps towards the great technology we need to enable interplanetary and eventually interstellar space travel, the pursuit of which, as things are going, is destroying the Earth first.

-

• #386

I agree with everything Oliver said.

-

• #387

^ AI bot

-

• #388

-

• #389

I am with andyp

-

• #390

However...?

-

• #391

I disagree with Oliver. I think AI can assist humanity in ways which we've barely begun to explore so far, particularly in medicine. My bigger concern is around an ethical framework for AI, which requires responsible governments and companies to collaborate and agree a set of international rules that binds everyone.

Sadly, I don't see that ever happening, especially with the power of the US Military-Industrial complex.

-

• #392

My bigger concern is around an ethical framework for AI

That ship has sailed, no? The media were having kittens about this a little while ago but that anxiety seems to have died down now.

Going back to my original point, how will the world look when our labour is worthless and the social contract is broken? What will we do with ourselves? Utopia or dystopia?

-

• #393

-

• #394

I shared this a while back but not in this thread I think, well worth a watch.

-

• #395

Sadly, I don't see that ever happening, especially with the power of the US Military-Industrial complex

There are high-risk sectors that are effectively regulated and state controlled: nuclear weaponry, gain-of-function research.

The main reason this area isn't regulated is Big Tech are incredible lobbyists.

Someone needs to modify this cartoon to read: "Sure, we destroyed humanity and the planet, but for a brief moment we made Sam Altman unbelievably rich and powerful."

-

• #396

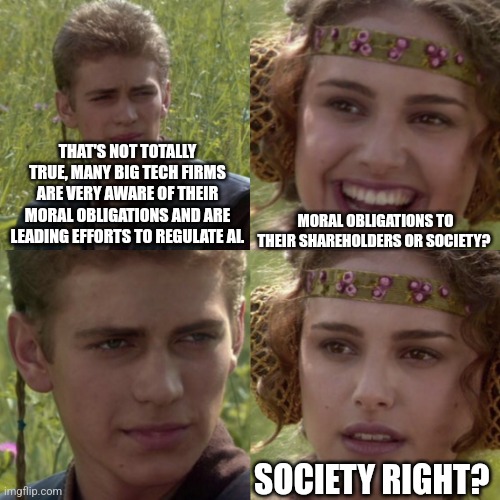

The main reason this area isn't regulated is Big Tech are incredible lobbyists.

That's not totally true; many big tech firms are very aware of their moral obligations and are leading efforts to regulate AI.

-

• #397

Lobbyists got to you, huh?

Jokes aside, which ones and how?

-

• #398

-

• #399

https://www.nvidia.com/en-gb/ai-data-science/trustworthy-ai/

https://aws.amazon.com/ai/responsible-ai/

https://ai.google/responsibility/principles/

https://newsroom.arm.com/blog/arm-ai-trust-manifesto

It's a known issue in the industry that CEOs and their boards know they have to address, because if they don't then Governments will force them to.

Look at device security: lots of manufacturers are selling devices that are laughably easy to compromise. The industry has tried to address this but too many rogue companies have ignored it, so now there is legislation either in place, like the UK PSTI Act, or coming, like the EU's Cyber Resilience Act, and it's going to have a massive impact on companies, e.g. Hoover have disabled their connected device service in the UK because it doesn't meet the PSTI requirements.

I know it's fun to dunk on Tech Bros, but there are a lot of people and companies that are acutely aware of their societal responsibilities and there is a long track record of successful industry-wide collaboration to improve and standardise areas of concern.

-

• #400

Story in the Times this morning of how two Harvard students have married AI facial recognition, a number of databases and Meta Glasses. They've tested them by walking round the metro, identifying people and talking to them as if they knew them.

Obviously it's raised some privacy concerns.


Thought this comment was pretty solid.

Having worked for a lot of my life with entrepreneurs I shouldn't be surprised by how much they're all winging it, but I still am. There are so many downsides to modern public companies, but you also see why the rules and culture try to turn them into these massive beasts. Silicon Valley though seems to love staying true to its roots of selling the sizzle and always over-promising and under-delivering.